Benchmarking our Website Vulnerability Scanner and 5 others

In February 2024, we set out to compare our Website Vulnerability Scanner against some of the established names in Dynamic Web Application Security Testing, both commercial and open-source: Burp Scanner, Acunetix, Qualys, Rapid7 InsightAppSec, and ZAP.

Why did we do this? A look behind the scenes

We are relatively new to the market: our scanner was rebuilt from scratch in the autumn of 2020. Four years and over 2.5 million scanned websites later, we wanted to see how we compare to the existing alternatives.

As most web security specialists know, there’s no reliable way to compare these products using standard benchmarks or vendor information. All you have are reviews scattered around the web. You can either trust those reviews or build your own web security testing lab tailored to your use case, and then spend the time and energy to test all these tools consistently.

Always on the lookout for ways to improve our scanner, we decided to put it in a battle royale. The goal of the benchmark was not to brag about how good our scanner is (although we had high hopes for it), but to take an honest look at what we do well, what we suck at, and what we can learn from other tools.

We approached this through the lens of an AppSec engineer tasked with choosing a DAST (Dynamic Application Security Testing) tool to monitor the company’s web apps. Below you’ll find out:

how we approached the problem

how we chose the targets

what the results were

and what we’ll do about them going forward.

The choice of competitors was based on two criteria: how popular they are in the community and how easy it is to get access to a license/trial account.

Building a benchmark for web app scanners

Fundamentally, when assessing a DAST tool, an application security engineer cares about three things:

The breadth of application functionality the scanner can crawl. A scanner can only look for vulnerabilities in an endpoint if it manages to crawl it.

The number and types of vulnerabilities the scanner is capable of finding.

The level of trust in the accuracy of the vulnerabilities the scanner reports. Any false positive reported by the scanner translates to wasted time.

For the benchmark to satisfy these requirements, the deliberately vulnerable app used for testing must:

Contain a wide variety of functionalities that cover most of what a real web application can do.

Be built on tech stacks that accurately reflect the industry’s current trends.

Be transparent: all the vulnerabilities that should be found must be known in advance.

For this benchmark, we decided to look only at the vulnerability detection component: how the vulnerabilities each scanner reported compare to the target’s actual security posture.

For each scanner, we measured:

the true positive rate: how many of the vulnerabilities the scanner reported actually exist

the false positive rate: how many of the vulnerabilities the scanner reported are not actually there

the false negative rate: how many of the vulnerabilities that exist were not reported by the scanner.
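To make these three measures concrete, here is a minimal sketch in Python. It assumes each finding can be reduced to a comparable key, here a hypothetical (vulnerability class, endpoint) pair, and that the ground truth is the list of vulnerabilities known to exist in the target. Because a DAST benchmark has no well-defined set of true negatives, the sketch expresses rates relative to the known vulnerabilities (for detection) and to the reported findings (for false positives). This is one reasonable convention, not necessarily the one behind our published numbers.

```python
from dataclasses import dataclass

# A finding reduced to a comparable key, e.g. ("sqli", "/login").
# This key format is an illustrative assumption.
Finding = tuple[str, str]


@dataclass
class ScanScore:
    true_positives: int
    false_positives: int
    false_negatives: int
    detection_rate: float        # share of known vulnerabilities that were reported
    false_discovery_rate: float  # share of reported findings that are not real


def score_scan(reported: set[Finding], known: set[Finding]) -> ScanScore:
    """Compare a scanner's findings against the known vulnerabilities of a testbed."""
    tp = len(reported & known)  # reported and actually present
    fp = len(reported - known)  # reported but not actually present
    fn = len(known - reported)  # present but never reported
    return ScanScore(
        true_positives=tp,
        false_positives=fp,
        false_negatives=fn,
        detection_rate=tp / len(known) if known else 0.0,
        false_discovery_rate=fp / len(reported) if reported else 0.0,
    )


if __name__ == "__main__":
    known = {("sqli", "/login"), ("xss", "/search"), ("ssrf", "/fetch")}
    reported = {("sqli", "/login"), ("xss", "/search"), ("sqli", "/search")}
    print(score_scan(reported, known))
```

Reducing findings to keys means two reports of the same issue on the same endpoint count once. How strictly findings are matched (by parameter, by endpoint, or by page) is a design choice that can noticeably change the totals.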

To maintain objectivity, we introduced another requirement: the testbed is not built by any of the companies behind the tools included in the benchmark. This prevents any particular scanner from having an advantage in the form of a testbed that’s fine-tuned for them.

With these requirements in mind, we settled on two targets: Broken Crystals and DVWA.

We chose Broken Crystals for the wide range of technologies it uses and the vulnerabilities it exposes:

it is built on a modern frontend framework, React, which can be a challenge for crawlers and might result in fewer vulnerabilities found.

it uses both a REST and a GraphQL API, with some vulnerabilities detectable only if the scanner knows how to work with these technologies.

it contains a wide variety of vulnerabilities, from classic XSS, SQL injection, and the like, to modern vulnerabilities arising from flawed JWT and GraphQL implementations.

We also chose DVWA because it is a classic. Traditional (non-single-page) applications still make up a big portion of the web. Additionally, given how well known DVWA is, we wanted to see how close to 100% detection the scanners can get.

Shortcomings of web app scanner benchmarks

We are big fans of benchmarks here in the Website Scanner team. We use them to assess our scanner on all kinds of metrics:

Speed and resource usage

Vulnerability detection

Crawling breadth on different types of technologies

All this experience has also shown us where a benchmark usually falls short: false positives.

We’ve designed intricate detections for vulnerabilities and written small benchmarks to make sure they work as intended, only to watch them fail gloriously against the beast that is the Internet. We’ve had all kinds of false positives: from those we should’ve thought about, to obscure edge cases that happened only on one specific version of a specific technology, to those we don’t understand to this day.

It’s important to understand that, even if a scanner performs exemplarily on a benchmark, that performance won’t necessarily carry over to the real world.

On our part, we are trying to bridge this gap by making it as easy as possible for our customers to report issues with scan results so we can work on fixing them. Additionally, we’re working on running our scanner continuously against bug bounty programs that allow it. This is sure to produce both a lot of fun and gray hairs, so maybe we’ll write an article about that.

How we set up the testbed

We deployed both applications to a VPS in the cloud. The setup was as simple as running docker compose up or docker run, since both offer Dockerized versions.

We scanned both applications individually with each tool. After a scanner was done, the application was reset to its initial state. We did this to make sure the scanners didn’t interfere with each other.
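The isolation procedure boils down to a simple loop: reset the target, run one scanner, collect its findings, repeat. The sketch below is a minimal illustration, assuming each target lives in its own Docker Compose project directory (the directory layout is hypothetical) and that each scanner is wrapped in a callable you provide. The scanner wrappers are placeholders, since every tool is driven differently.

```python
import subprocess
from typing import Callable

# Hypothetical type: a scanner wrapper takes a target URL and returns its findings.
Scanner = Callable[[str], list[str]]


def docker_reset(project_dir: str) -> None:
    """Tear down a Docker Compose project, including its volumes, and start it fresh."""
    # `down -v` also removes named volumes, so no state from the previous scan survives.
    subprocess.run(["docker", "compose", "down", "-v"], cwd=project_dir, check=True)
    # In practice you would also wait for the app to become healthy before scanning.
    subprocess.run(["docker", "compose", "up", "-d"], cwd=project_dir, check=True)


def run_benchmark(
    targets: dict[str, str],         # target name -> base URL
    scanners: dict[str, Scanner],    # scanner name -> wrapper
    reset: Callable[[str], None],    # resets one target, given its name
) -> dict[tuple[str, str], list[str]]:
    """Scan every target with every scanner, resetting the target before each scan."""
    results: dict[tuple[str, str], list[str]] = {}
    for target_name, url in targets.items():
        for scanner_name, scan in scanners.items():
            reset(target_name)  # start from the initial state so scans can't interfere
            results[(scanner_name, target_name)] = scan(url)
    return results
```

For example, with one project directory per target, the reset hook could be `lambda name: docker_reset(f"./{name}")`. Passing the reset as a parameter keeps the loop testable without Docker.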

For this benchmark, we used the latest stable version of every scanner as of February 2024.

We manually configured each of them to use their most comprehensive crawling strategy and try all the available vulnerability detections.

Where available, we manually provided the Swagger files defining the REST API, as well as the GraphQL endpoint to be scanned.
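Supplying the API definition matters because a crawler can’t click its way to an endpoint that no page links to. As a rough illustration, the sketch below lists the operations a scanner could seed its scan with from a Swagger/OpenAPI document. It assumes the spec has already been parsed into a Python dict (for example from JSON) and reads only the standard paths object; real scanners also use parameter schemas, request bodies, and server definitions, which this sketch ignores.

```python
# HTTP methods that can appear as operation keys under an OpenAPI path item.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def list_operations(spec: dict) -> list[tuple[str, str]]:
    """Return sorted (METHOD, path) pairs for every operation in an OpenAPI/Swagger spec."""
    operations = []
    for path, path_item in spec.get("paths", {}).items():
        for key in path_item:
            # Path items can also hold non-operation keys such as "parameters" or "summary".
            if key.lower() in HTTP_METHODS:
                operations.append((key.upper(), path))
    return sorted(operations)
```

Each pair, combined with its parameter definitions, becomes a request the scanner can attack even if the spider never finds a link to it.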

Last, but not least, where possible, we configured each scanner to run authenticated scans and did our best to validate that authentication was successful.

It would have been easier for an independent reviewer to validate our findings if we had used the default scan settings. The problem was that, by default, some scanners exclude a lot of vulnerability detection modules, which would have made the results inaccurate.

What were the results for Broken Crystals?

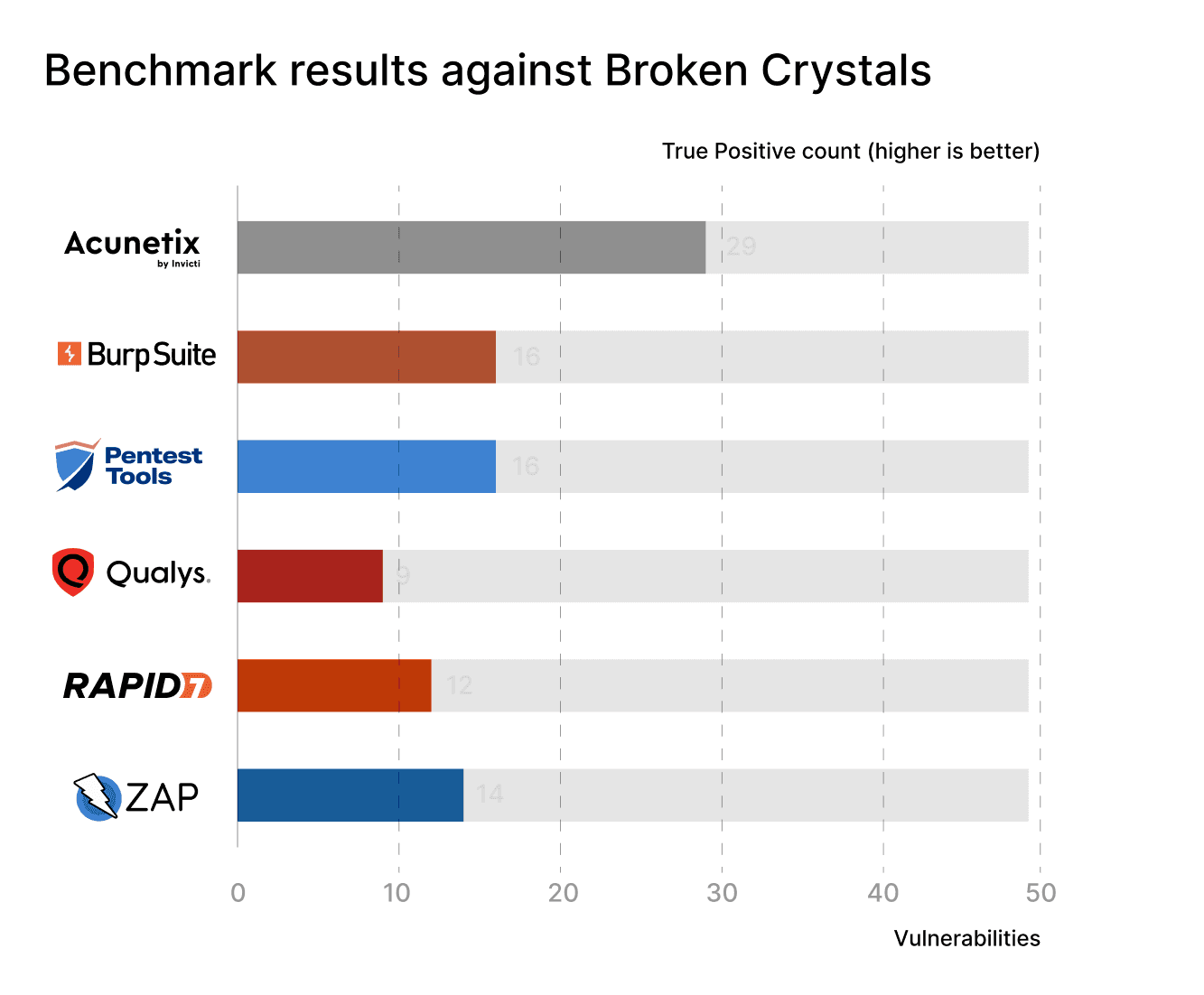

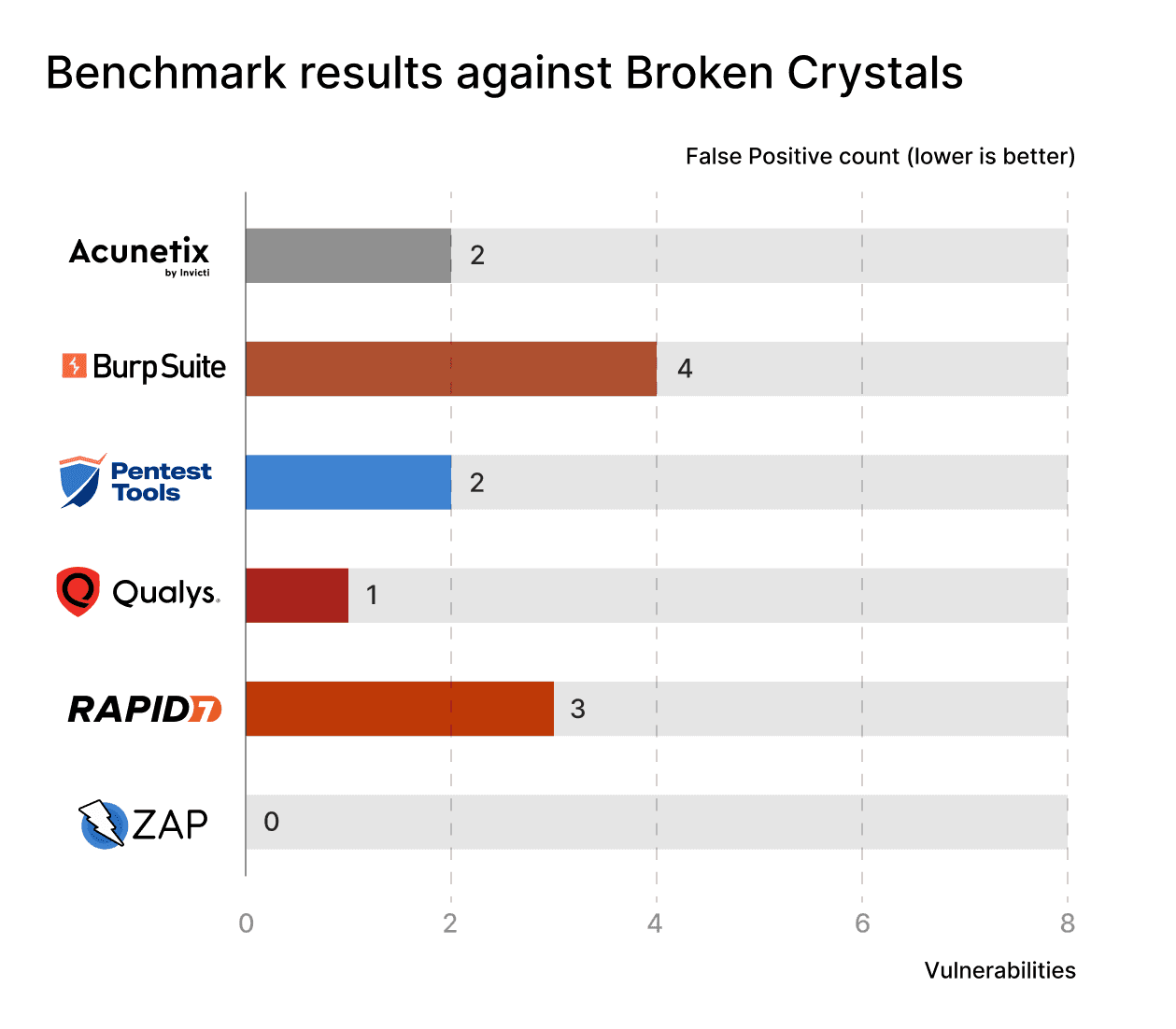

For Broken Crystals, Acunetix came in first, by a pretty big margin. Our Website Vulnerability Scanner landed in second place, tied with Burp Suite. Surprisingly, ZAP surpassed both Qualys and Rapid7 and claimed an honorable 4th place.

On the false positive front, things were quite even: all scanners reported some false positives, but none of them really stood out in a negative way. The differences were small enough to consider the scanners evenly matched.

What were the results for DVWA?

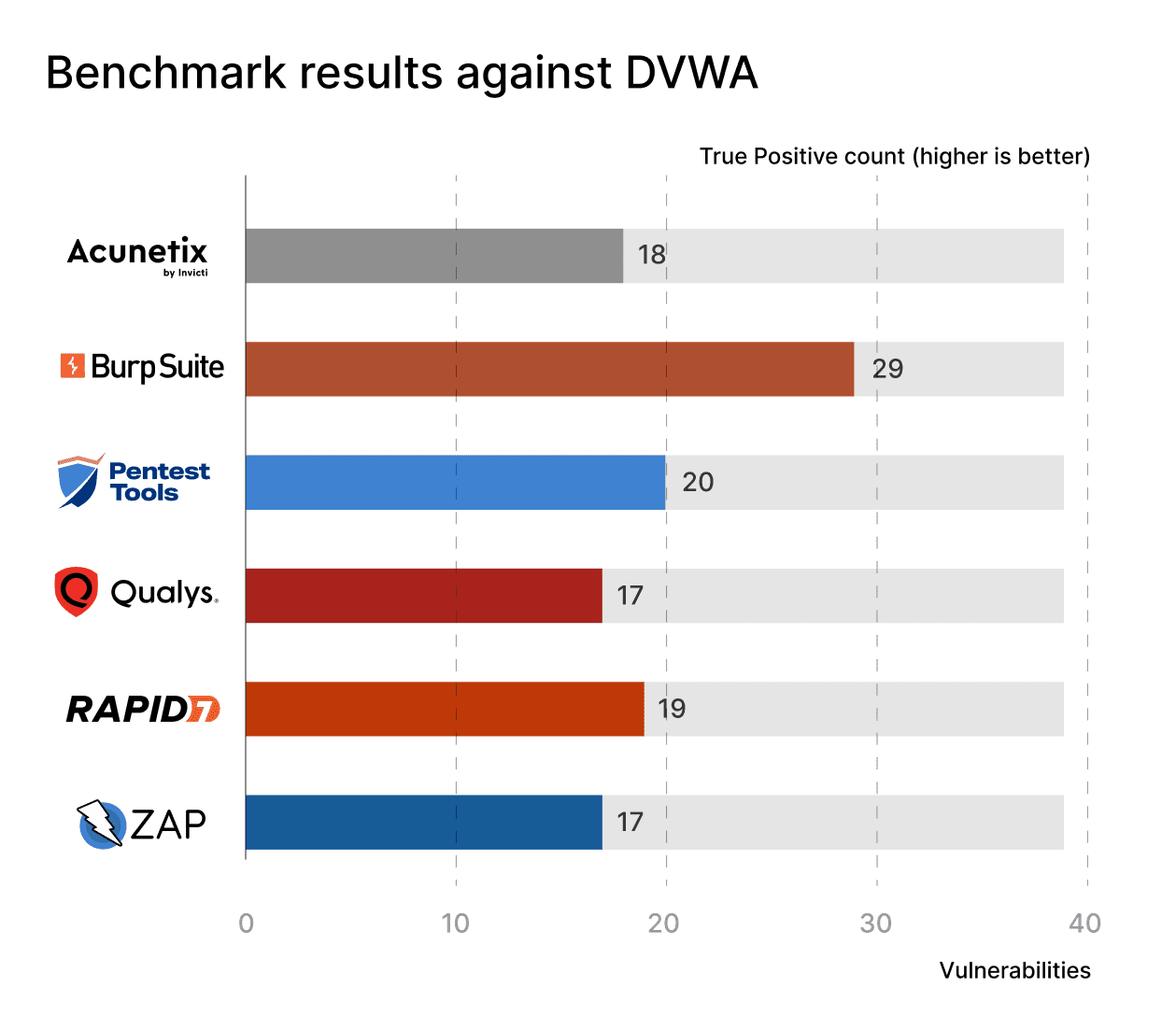

Burp came in first, with a huge 29 out of 39 vulnerabilities found. The Pentest-Tools.com Website Vulnerability Scanner detected the second-highest number of vulnerabilities. Rapid7, with 19 vulns, and Acunetix, with 18, took third and fourth place respectively.

The main reasons we missed some vulnerabilities were:

We detected only 4/12 XSS issues, compared to Burp, which detected 11/12 (kudos to Gareth and James for their great work!). Our results came down to some spidering issues and to the fact that we only report an XSS if the payload successfully executes in a headless browser. We did detect some of the other issues, but those vulnerabilities required user interaction before the payload would execute, so we didn’t report them.

Our spider had some bugs in edge cases, which meant some vulnerable endpoints never reached the Active Scanner.

Our Passive Scanner is missing some detection modules, such as those for identifying IP addresses, file system paths, and SQL statements in responses. For the past two years, most of our detection efforts went into improving the Active Scanner. This is a lesson that we should give the Passive Scanner some love as well.
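Passive checks like the ones missing from our Passive Scanner only inspect responses the scanner has already received, without sending extra payloads. The following is a minimal sketch of three such checks, assuming response bodies are available as strings. It assumes the interesting IP case is a private (internal) address, and it flags Unix- or Windows-style absolute paths and SQL fragments. The regular expressions are illustrative and deliberately simple; production detectors need far more tuning to avoid the false positives discussed earlier.

```python
import ipaddress
import re

# Candidate IPv4 addresses; validity and "privateness" are checked with the ipaddress module.
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")
# Rough patterns for absolute file system paths leaking into responses (illustrative only).
UNIX_PATH_RE = re.compile(r"(?<![\w/])/(?:var|etc|home|usr|opt|srv)/[\w.\-/]+")
WINDOWS_PATH_RE = re.compile(r"\b[A-Za-z]:\\(?:[\w.\-]+\\)*[\w.\-]+")
# Very rough pattern for SQL statements echoed back, e.g. in database error messages.
SQL_RE = re.compile(r"\bSELECT\b.+?\bFROM\b", re.IGNORECASE | re.DOTALL)


def passive_findings(body: str) -> dict[str, list[str]]:
    """Return suspicious artifacts found in a response body, grouped by type."""
    private_ips = []
    for candidate in IPV4_RE.findall(body):
        try:
            if ipaddress.ip_address(candidate).is_private:
                private_ips.append(candidate)
        except ValueError:
            pass  # e.g. "999.1.1.1" matches the regex but is not a valid address
    return {
        "private_ip": private_ips,
        "file_path": UNIX_PATH_RE.findall(body) + WINDOWS_PATH_RE.findall(body),
        "sql": SQL_RE.findall(body),
    }
```

Filtering IP candidates through the ipaddress module rather than a stricter regex keeps the pattern readable and drops public addresses, such as a CDN IP, that are not worth reporting.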

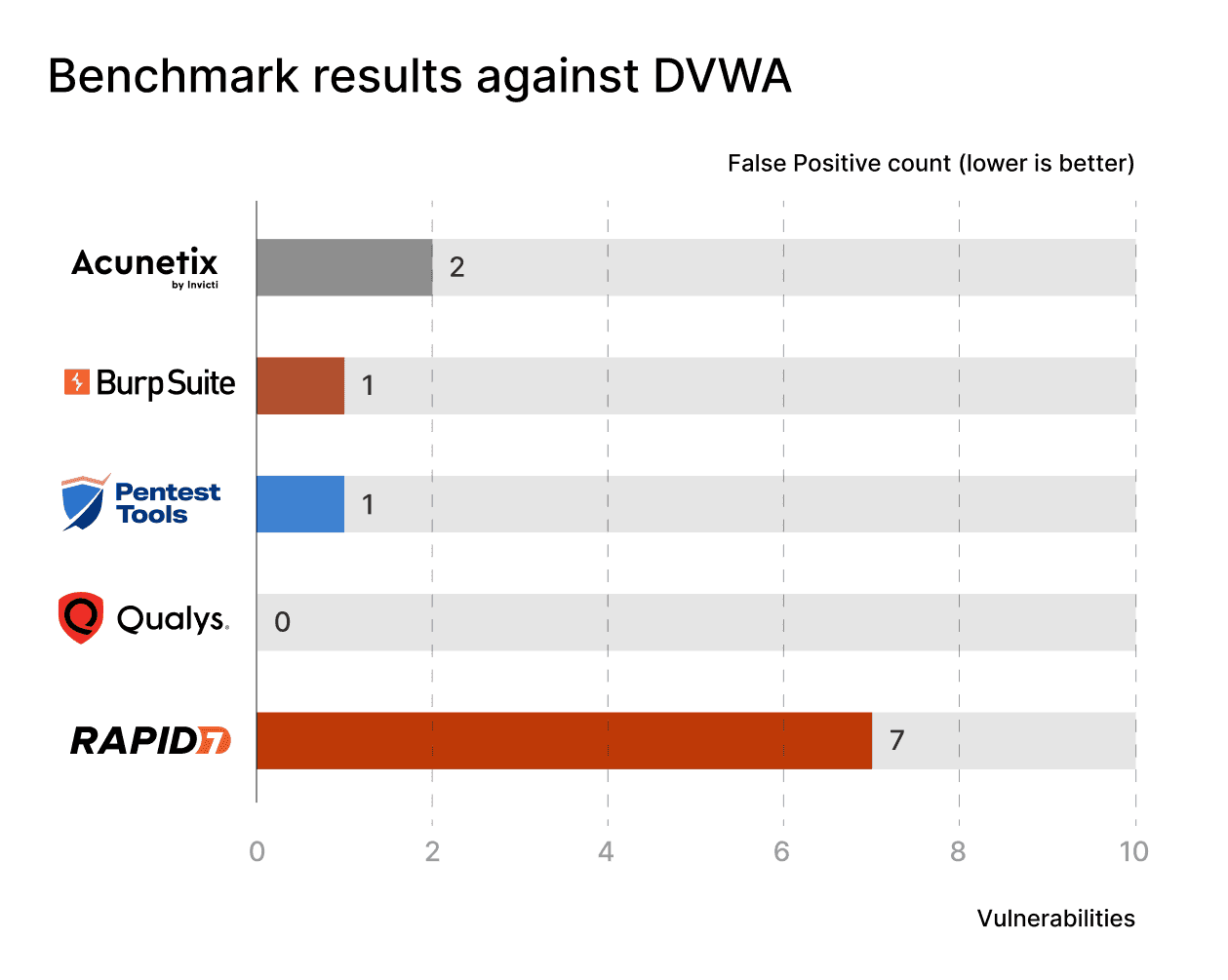

When it comes to false positives, things were both the same as for Broken Crystals and also wildly different.

The careful reader will notice that ZAP is missing from this comparison. We excluded it because it reported false positives on a whole other scale compared to the other scanners: it reported 88 SQL injections that were not actually there. This matches our experience and is one of the reasons we decided to move from ZAP to building an in-house scanner in 2020.

Find all the data (about targets, scan settings, methodology, and more!) in this comprehensive white paper, which you can download for free (no email required).

A comprehensive benchmark of web app vulnerability scanners 2024

How we plan to use these results

Having had the chance to use six different scanners in a short period of time, we were able to get both an objective and subjective view of what we could do better.

In no particular order, we are going to spend some time on:

Improving our spider. We can be better at simulating an actual user interacting with Single Page Applications. Specifically, we’re going to figure out how we can chain interactions, like clicks, with the application. This will help us reach pages we are not currently crawling, like submenu items where the DOM is interactively modified on each click.

Better integrate the REST and GraphQL Scanners into the Website Vulnerability Scanner. We wanted to give our users the flexibility of running a specific scan type, but for some users an integrated, all-in-one approach makes more sense. We will still leave you with the option to choose, but you will also be covered on all fronts by running only the Pentest-Tools.com Website Vulnerability Scanner.

Run the Active Scanner detectors on variables in a GraphQL request. Right now, we only report GraphQL-specific vulnerabilities, but it would be cool if we could take a GraphQL query or mutation and look for classic server-side vulnerabilities in its variables. SSRF via a GraphQL variable coming to a Scan Report near you!

Run continuous benchmarks. Benchmarking once a year or every quarter is nice, but we think the real value lies in continuously assessing your scanner on real-world targets. We’ll work on running our scanner continuously against bug bounty targets that allow it. The biggest difference will probably be a decrease in false positives. If we manage to add some human eyes to the process, there is a chance we might decrease false negatives as well.
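The GraphQL item in the list above amounts to treating each variable in a request as an injection point, the same way a classic scanner treats a query-string parameter. The minimal sketch below assumes a request has already been captured as a query string plus a flat variables dict; the function, the payload list, and the fetchPreview mutation in the demo are hypothetical illustrations, not part of our scanner. It produces one mutated request body per (variable, payload) pair and leaves sending the requests and analyzing the responses to the existing detectors.

```python
import copy
import json
from typing import Any, Iterator

# Illustrative payloads only; real detectors use many more, chosen per vulnerability class.
PAYLOADS = {
    "sqli": "' OR '1'='1",
    "ssrf": "http://169.254.169.254/latest/meta-data/",
}


def mutate_variables(query: str, variables: dict[str, Any]) -> Iterator[tuple[str, str, dict]]:
    """Yield (variable name, vulnerability class, request body) with one variable replaced."""
    for name, value in variables.items():
        if not isinstance(value, str):
            continue  # this sketch only fuzzes string variables
        for vuln_class, payload in PAYLOADS.items():
            mutated = copy.deepcopy(variables)  # never modify the captured request
            mutated[name] = payload
            # Common GraphQL-over-HTTP JSON body shape: {"query": ..., "variables": ...}
            yield name, vuln_class, {"query": query, "variables": mutated}


if __name__ == "__main__":
    query = "mutation Fetch($url: String!, $limit: Int) { fetchPreview(url: $url, limit: $limit) }"
    for name, vuln_class, body in mutate_variables(query, {"url": "https://example.com", "limit": 5}):
        print(name, vuln_class, json.dumps(body))
```

Copying the variables for each mutation keeps every request independent, so any anomaly in a response can be attributed to exactly one injected variable.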

Working on these will probably give us some nice stories to share, so stick around for that article!